The goal of diplomacy is to avoid stupid wars, not all wars

Don't blame computers when you model wrong

The most basic thing I've learnt is that the point of diplomacy and international treaties isn't to prevent war. That's seen as impossible. The goal is to eliminate wars based on miscalculations. Stupid wars result in destruction and suffering for no reason. If only you could honestly communicate your commitment and abilities, then maybe any issues would be resolved without violence at all. Unfortunately, the only way to really, honestly communicate abilities and commitment is to periodically display them. This is part of why zero war is not seen as likely to be achievable.


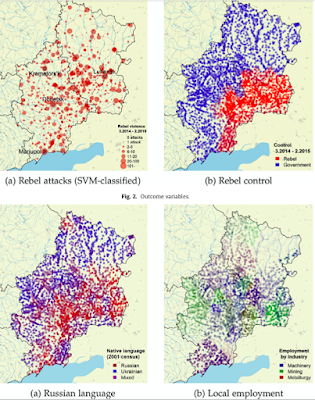

Zhukov, Yuri M. "Trading hard hats for combat helmets: The economics of rebellion in eastern Ukraine." Journal of Comparative Economics 44, no. 1 (2016). I know the title is politically incorrect, but the article shows that neither speaking Russian nor being ethnically Russian predicts backing Russia in Ukraine. Rather, high economic inequality does – an outcome of the collapse of heavy industry.

If we've managed to make it really stupid to fight an offensive war, that's a pretty good outcome. The only really well motivated offensive war I can think of in my lifetime was the invasion of Cambodia by Vietnam, which occurred after Pol Pot had already killed 1/4 of his citizens. Arguably, NATO actions in Kosovo and Libya were similar, as was what NATO intended to do in Syria before Obama took that one walk in the White House Rose Garden. These actions and inaction all created ongoing messes, but perhaps the interventions created more merciful messes than what they replaced, or than what did happen in Syria. I'm not informed enough to say.

But I know a lot about modelling. I know it's not useful to blame the miscalculations of Putin – and many other people, including in the West – on some mystic or supernatural capacities of the Ukrainian people. Yet these miscalculations seem to be ongoing. Over the weekend I was enraged to see that Marine Corps Commandant General David Berger had claimed that computer models "didn't and couldn't" account for "the Ukrainian people's will to fight for their homeland." Maybe those models didn't, but they so easily could have. Or rather, the people who wrote them could have set that level correctly.

Click the picture to see this on Twitter, or click here to see the article Gady is quoting.

The Ukrainian people know what they want, they know what they've lost, and they know their enemy. They know what life is like in the parts of their country that have been occupied by Russia since 2014, and how it is over their various borders. They also know that their enemy is a set of human beings exactly like them making belittling decisions, and they are rightly outraged. And at least some of them know how much military training and support their forces have been receiving since 2014, and how much intelligence support they are receiving now, and think they have a chance.

Let's not mystify this process. And I can't say this strongly enough – let's not pretend that computer simulations can't model basic social dynamics because they're so f**king mystical. Scientists model emotions and social dynamics every day. I personally have published models of this kind of polarisation, and I'm hardly the only one. If there really is this model Gen Berger describes that included an underestimate of the Ukrainian people's willingness to fight, then the people who built it made a mistake. People were wrong about the inputs. At this stage we need to reassess everything those who are surprised by what's happening think they knew, and then use our improved understanding to figure out how to conclude this war with the minimal further damage. When we see our models are wrong, we should then learn something about the world and fix them.
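To make the point about inputs concrete, here is a minimal sketch of a toy attrition model in Python. It is not the model Gen Berger referred to, nor any published model: it assumes simple Lanchester-style attrition, and every name and number in it (`simulate`, `attacker_will`, `defender_will`, the force sizes and effectiveness values) is hypothetical and chosen only for illustration. "Will to fight" appears as an ordinary parameter: the fraction of its starting strength a side is prepared to lose before it stops fighting.

```python
def simulate(attackers, defenders, attacker_eff, defender_eff,
             attacker_will, defender_will, dt=0.001, max_steps=1_000_000):
    """Toy Lanchester-style attrition with a morale break point.

    Each side loses strength in proportion to the other side's current
    strength times its effectiveness. A side gives up once its losses
    exceed the fraction of its starting strength given by its "will".
    All parameters are illustrative assumptions, not real estimates.
    """
    a, d = float(attackers), float(defenders)
    a_break = attackers * (1.0 - attacker_will)  # strength at which attackers quit
    d_break = defenders * (1.0 - defender_will)  # strength at which defenders quit
    for _ in range(max_steps):
        if d <= d_break:
            return "attacker"
        if a <= a_break:
            return "defender"
        # Simultaneous Euler step: both sides fire on the other's current strength.
        a, d = a - defender_eff * d * dt, d - attacker_eff * a * dt
    return "stalemate"


# Identical forces and weapons in both runs; only the assumed will to fight differs.
scenario = dict(attackers=150, defenders=100,
                attacker_eff=1.0, defender_eff=2.0, attacker_will=0.3)
low_will = simulate(defender_will=0.2, **scenario)
high_will = simulate(defender_will=0.8, **scenario)
print("defender_will=0.2 ->", low_will, "wins")
print("defender_will=0.8 ->", high_will, "wins")
```

With these made-up numbers the predicted winner flips when `defender_will` goes from 0.2 to 0.8, with nothing else changed. The model has no trouble representing will to fight; whether its prediction is any good depends on whether the person setting that input got it right.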

And while I'm ranting – this isn't only about Putin. Putin is being directly supported by many overly-wealthy people in Russia, London, the US, and other places. And he's being indirectly enabled by everyone who thinks it's OK for individuals to have this much power and seeks to improve their own wealth and power by disrupting regulatory efforts designed to ensure that democratic governance can limit these kinds of excesses. This is another way that I have come to have expertise on this – because I work in digital governance. Consequently, I have been learning about market concentration and its relationship to "big tech" and the entity formerly known as GAFAM (MMAAA – bleat it like a sheep, then say "wake up sheeple"). I've known since 2016 that inequality was a posited major factor in WWI. I've also known this was an even more important ethics issue than bias in AI because this time it's not just a potential world war, it's a potential world war during a climate crisis.

See also my previous blogpost: Paying a cost to harm others more: Subtractive asymmetric balancing, antisocial punishment, and Ukraine's nuclear power plants, also based on some of my published science. Science can explain what's going on, you just have to want to know – and want it even more than you want to be rich.

Comments

The main takeaway of this specific post is meant to be boldfaced but doesn't look so in my Chrome browser on my laptop (though it does in Safari on my iPhone): "At this stage we need to reassess everything those who are surprised by what's happening think they knew, and then use our improved understanding to figure out how to conclude this war with the minimal further damage." The reason I talk about my expertise is that I've learnt that not everyone knew that some major security organisations thought I was qualified to speak on security, which I bring up just to reinforce this important message. Sorry to throw in arguments from authority; mostly this is meant to be arguments from science or by reason, but it's an important enough topic that I threw in everything I had.