Explaining limit cases: A partial rebuttal of Gunkel, Nyholm, and others' interpretation of "Robots Should Be Slaves"

8 September 2025

A number of people have positioned me in a space where I said that I believed it was possible to create AI that deserved personhood, but that it was a bad thing to do – that we could but shouldn't make AI or robot persons.

This is half right. The original book chapter said that it was so obviously and profoundly wrong to own people that it was irrelevant whether or not we could build them. The original book chapter was agnostic about whether that was a possibility.

As computer programmers, we often worry about corner cases. That is, quite often in software we need to do something a number of times, or for some set of data. And we create a program that does that right except in exceptional cases, like when there's no more data. So great programmers have to be great at thinking about and checking corner cases. I have been a great programmer, though I'm out of practice now.
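For readers who don't program, here is a minimal sketch of what a corner case looks like. It is a hypothetical illustration, not anything from the book chapter: the function names `naive_mean` and `safe_mean` and the `default` parameter are invented for the example. The first function is right for ordinary data and fails only when there is no data at all; the second checks for that case explicitly.

```python
def naive_mean(values):
    """Average a list of numbers. Correct for ordinary input,
    but raises ZeroDivisionError when the list is empty."""
    return sum(values) / len(values)


def safe_mean(values, default=None):
    """Same calculation, with the 'no data' corner case handled.
    Returning `default` here is an assumed design choice for illustration."""
    if not values:  # the corner case: nothing to average
        return default
    return sum(values) / len(values)


if __name__ == "__main__":
    print(naive_mean([2, 4]))  # ordinary case works in both versions
    print(safe_mean([]))       # corner case handled rather than crashing
```

The point is only that the corner case is a rare input that real programs do meet, so it has to be checked for rather than argued away.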

Computer scientists (and mathematicians), though, also like to use logic to avoid having to do empirical work on something that will never work. One of the tricks of logical proofs is called "taking the limit case." If you can show something is true for even the most extreme cases, then you don't have to worry about what happens as you approach those extreme cases. This is what my book chapter tried to do. People were wondering when we'd need to start extending moral patiency to robots, and I was trying to say "never, because creating such a commercial product would be anathema to our entire moral system."

Corner cases and limit cases aren't quite the same thing, though they are related. But I think that might be what's confused people – they took my limit-case argument as handling a corner case.

Moral philosophers have come up with other arguments for AI patiency, such as virtue ethics and Kantian (over)identification, but the chaos of the present moment, in my opinion, entirely vindicates my original argument. And notice that both EU law and the UNESCO global recommendation on AI ethics support this perspective too. For right now at least, governments have agreed globally that AI legal personhood would be just an invitation for corruption, and that law must be centred on human responsibility.

In my opinion, what that means in terms of Kantian (over)identification is that we have a moral obligation to make it transparent that AI is not a person. More often it is an overly-cute interface on a transnational corporate platform service deep in the corrosion of surveillance capitalism. We shouldn't harm ourselves by treating something we identify with badly – rather, we should use products that don't falsely present as overly similar to ourselves. And if you are looking for a way to express virtue, please focus on the 8 billion human beings who need your help, or the ecosystem that sustains them.

Applying ethics we've derived to handle humans and other animals – which are evolved and presented to us by nature – to questions of artefacts, which we design, is flawed. It is a category error. It doesn't work. It just creates moral hazards, via commerce.

Original post, including subsequent commentary and apology for exploiting the word "Slaves"

4 October 2015

There's a continuum about how much a robot is like a human; they will always be more human than stones in that they compute, sense & act, but they don't need to be anthropomorphic. However, as much as I hate elevating fiction to the same conversation as real engineering, if you are looking at RUR or Blade Runner, you are positing modified clones. I would not call these "robots". Such an approach would clearly inherit the issues apes have evolved with subordinance, and thus owning them would be unethical and permanently damaging to them. Clones should NOT be slaves.

The last line alludes to my old talk and book chapter Robots Should Be Slaves. I originally used that line to clarify that it was obligatory not to build robots we are obliged to, that they should not be human-like because they will necessarily be owned. However, I took it for granted that humanity has agreed that humans cannot be slaves, and assumed I was transparently asserting robots' inhumanity. In fact, I realise now that you cannot use the term "slave" without invoking its human history, so I don't use this line in talks any more. But the founder of Machine Question, Dave Gunkel, keeps bringing that paper up, so I felt safe alluding to it here.

Update 31 July 2016, for the Westworld controversy: I don't need to say anything, just direct you to "Why do we give robots female names? Because we don't want to consider their feelings." Laurie Penny is wrong to assume that AI will also necessarily suffer from emotional neglect, but right to think AI ethics is a feminist issue.

Update 9 March 2016: I had a big discussion about this with someone on Twitter yesterday, Chris Novus. Chris was giving a pretty standard futurist / transhumanist line: that there was no way that it would be OK to call a sentient robot a "robot" or a "slave", because these terms were too demeaning. By "sentient" he meant basically "moral subject", and yes, I totally agree we shouldn't own moral subjects. But there's also a political and economic reality, which is that if we manufacture something, we'll own it. So we shouldn't manufacture moral subjects; that is, we should choose to build robots that do not have the critical traits of moral subjects. But simple intelligence (connecting perception to action) does not in itself make something a moral subject. Again, this paragraph is just a précis of my book chapter, Robots Should Be Slaves; people who read past the title already know this.

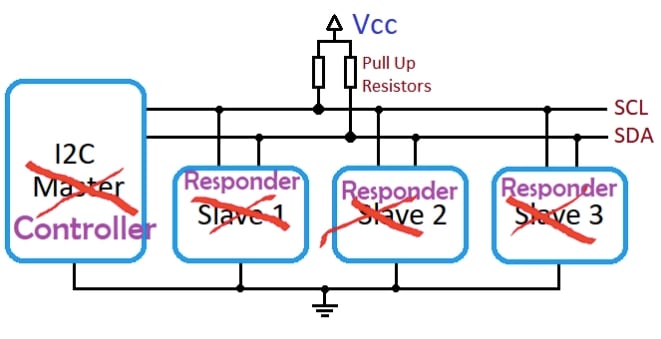

Update 6 February 2021: Sitting in the 2020s, it may now seem insane that I could ever have come up with a slave metaphor, but such metaphors were actually fairly pervasive in electrical engineering, including computer science, though that is now being reconsidered. Cf. this Wikipedia page, or less mutably, this article by Tyler Charboneau, How “Master” and “Slave” Terminology is Being Reexamined in Electrical Engineering.

Figure from Charboneau's 2020 article, originally from another IEEE blog.
